For the first time in nearly seven years, I am now unemployed. Yesterday, along with several other people, I was laid off from my job at The Omni Group, and I’m now looking for new work. UPDATE: Here is a link to my resume PDF and my complete CV. First of all, thank you to…

Tag: iPhone

iPhone 6 and 6 Plus Mockups

If you’re itching to see if the iPhone 6 Plus will fit in your pocket, I made these mockups (also including the regular-size iPhone 6) that you can print on a 3D printer.

Vertical Horizon

Ever since the iPhone first started shooting video, people have decried the use of the vertical orientation. Why would you do that? It looks so horrible! It’s unnatural! Hang on a moment while I pass judgement on you. Stop it. Let’s take a look at the history of film aspect ratios for a moment. Sure,…

Fingers, Virtual and Otherwise

Recently, a few developers were having a discussion on ADN about a promo video for a new iPad app. Uli Kusterer raised the point that the video was missing something: fingers. It was hard for him to tell what was a tap, and what was something that was merely happening as normal in the app….

FlintFone

This is the ringtone for Derek Flint’s direct line to the President in the 1966 movie Our Man Flint, a sort of parody of the James Bond movies of the time. The ringtone was later reused as the sound of the electronic handcuffs in Hudson Hawk.

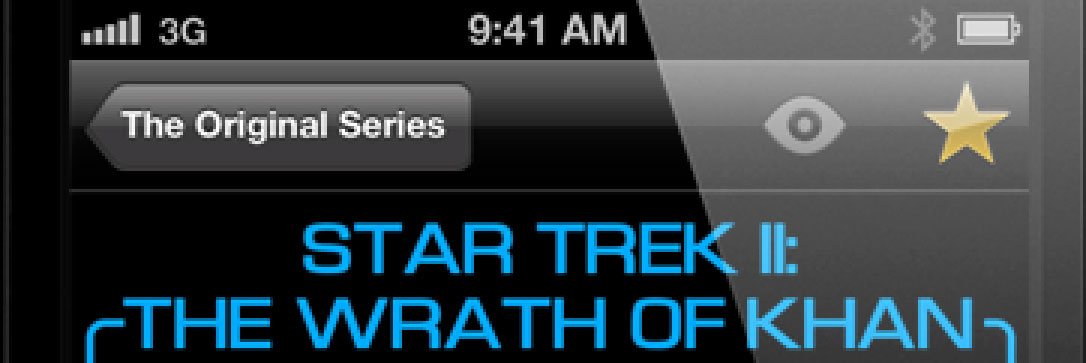

Post Atomic Horror Unofficial Star Trek™ Episode Guide

Hey, did I mention that I launched an iPhone app in the App Store a couple of weeks ago? I don’t think I did. The Post Atomic Horror Unofficial Episode Guide is a fun and humorous guide to all of the Star Trek™ adventures featuring the original crew. It helps you keep track of…

How I learned Objective-C, Cocoa, and developed an iPhone App

As I just posted to 43 Things [a site that is sadly now dead — Ed.], I finally shipped my first public iPhone app, so maybe it’s time to look back at this journey and see how it’s gone so far. It seems like there’s always more and better to learn, but I learned enough…